Making a machine do things—changing the state of a computer—is amazing and fascinating to me. We give a computer step-by-step instructions, and it follows them like a slave. That’s programming.

Ruby is a programming language (a fun one), but its main implementation has an architectural limitation: the GIL, or Global Interpreter Lock (CRuby’s own source now calls it the GVL, Global VM Lock). In MRI/CRuby, the GIL lets only one thread execute Ruby code at a time within a process, no matter how many cores your machine has.

Because of this limitation, I never really settled on Ruby. I tried multiple languages: Go, OCaml (a little), and Elixir (a fantastic language, thanks to the BEAM VM). But I also found out the hard way that trying out many languages without mastering one leaves you a junior in all of them. A generalist who’s a master of none is essentially just a beginner wearing different hats.

So now I’m back to Ruby, trying to understand it better and on a deeper level. This passion really clicked once I started learning about Ractors. Not because I urgently needed parallel programming constructs, but because (this might sound weird) I hate restrictions. I have a habit of premature optimization. I always want to write flawless, fast, efficient code: the best possible way to do something. In short, I’m a bit of a perfectionist.

But this perfectionism has a downside: it pulls you in different directions. You end up skimming many things and going deep into none. Ironically, that defeats the whole idea of being a perfectionist.

This marks the start of my journey to understand Ruby and its runtime on a deeper level.

OS Processes and Threads

Before diving into how Ruby executes and runs programs, I want to refresh my understanding of OS processes and threads.

OS Process

So what is an OS process?

According to Wikipedia:

“A process is the instance of a computer program that is being executed by one or more threads.”

To put it simply:

A process is a running program.

When your compiled binary is sitting on disk, it’s inert. But when it runs, the OS allocates memory, assigns it CPU time, and manages its lifecycle. A typical process includes:

- Program Code (Text Section): Stores the actual machine instructions.

- Current Activity: The program counter (PC) and CPU registers, basically the CPU’s current state for this process (think of this like “state” in React).

- Process Stack: Stores function call frames and local variables. (Fun fact: this is where Stack Overflow got its name; a stack overflow happens when a program runs out of stack space.)

- Heap: Memory you allocate dynamically at runtime (via malloc, etc.).

- Data Section: Stores global and static variables.

Linear execution is easy to follow because the program has one clear path.

Let’s look at a basic C program as a demonstration:

#include <stdio.h>#include <stdlib.h>

const int AGE_LIMIT = 18; // Global – lives in the data section

// This function will have its own stack frame.int canVote(int age) { return age >= AGE_LIMIT;}

int main() { const int kaiserAge = 44; // This is stored in the Main functions stack frame.

int *pointerOnHeap = (int *) malloc(sizeof(int)); // Ask OS to give us "int" size of memory inside the heap, typically 4 bytes for int.

*pointerOnHeap = 12345; // Assign value to the memory location inside the heap.

if (canVote(kaiserAge)) { printf("Kaiser can vote\n"); } else { printf("Kaiser can't vote, he's too young\n"); }

printf("Data on heap: %d\n", *pointerOnHeap);

free(pointerOnHeap); // Hand over the heap memory back to the OS.

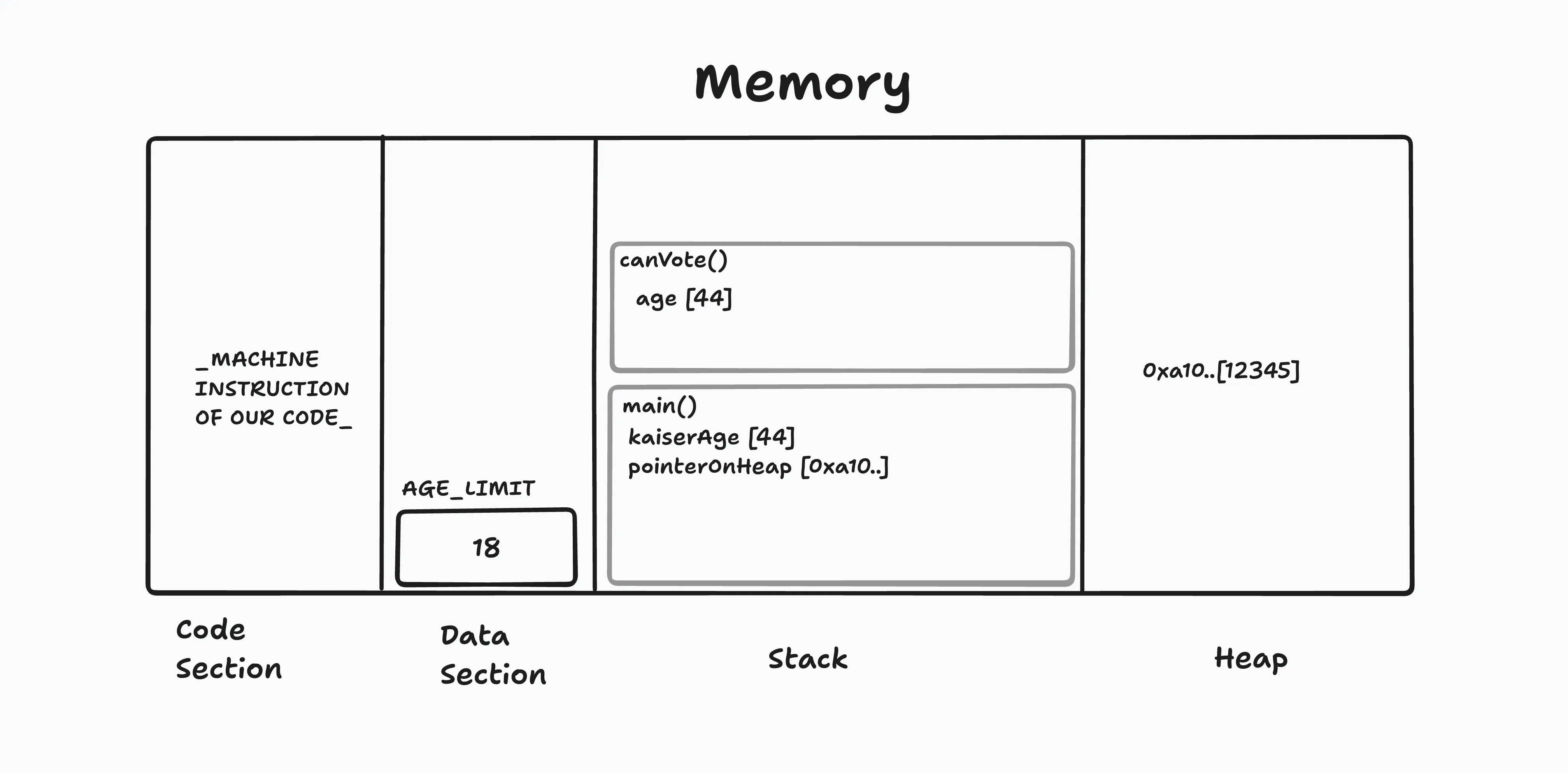

return 0;}Here is a overly simplified depiction of how the memory looks at the peak while the above program turns into a process:

This program has linear execution: main() allocates some heap memory, calls canVote(), prints the results, frees the memory, and exits. Simple. But that changes when we introduce threads.

Note: We’re skipping over I/O (e.g., printf() writing to standard output), file access, networking, and other OS interactions.

OS Threads

An OS process is heavyweight. The OS must allocate separate memory space, kernel structures, file descriptors, and scheduler metadata. This makes processes isolated and secure—but expensive.

Threads are the lightweight alternative.

A thread is a unit of execution inside a process.

Think of it like this: you have two functions that could run side by side, but in a single-threaded program they run one after the other. Threads allow the OS to schedule different parts of your program to run concurrently (and, on a multi-core machine, in parallel).

Let’s look at a basic multithreaded C example using POSIX threads:

#include <stdio.h#include <stdlib.h>#include <pthread.h>

int global_counter = 0;

void* thread_func(void* arg) { int thread_id = *(int*)arg; // Get the args and cast to int, as we supply it as an int. int local_var = 100; // Create a local variable, this is be created on the threads stack, inside this function stack frame. int* heap_data = (int*) malloc(sizeof(int)); // Ask OS for memory on the heap.

*heap_data = 500 + thread_id;

global_counter += 1; // We can easily access gloabl vars from the parent process.

printf("Thread %d: heap_data = %d\n", thread_id, *heap_data);

free(heap_data);

return NULL;}

int main() { pthread_t t1, t2; // Create pthread pointers on the stack. int id1 = 1, id2 = 2;

// Create an actual thread. // pthread_create(pthread_pointer, args to the pthread_create fn, function pointer, args to our function) pthread_create(&t1, NULL, thread_func, &id1); pthread_create(&t2, NULL, thread_func, &id2);

pthread_join(t1, NULL); // Wait for t1 to finish and join the main thread. pthread_join(t2, NULL);

printf("Final value of global_counter: %d\n", global_counter);

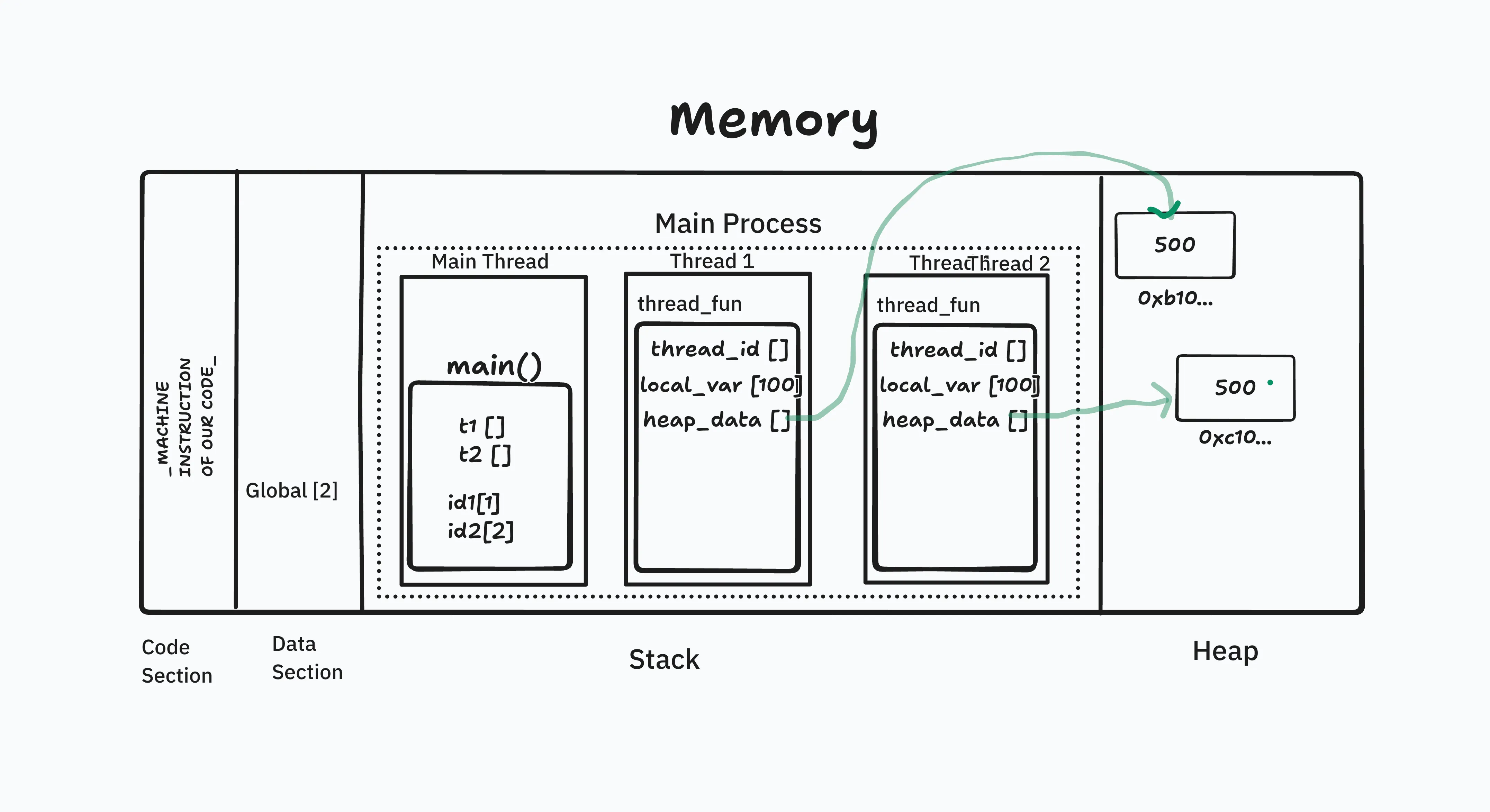

return 0;}Here is the simplified memory visualization of the above multi-threading program:

Note: Every process starts with one thread, called the main thread. Whenever our code does something, it is actually this OS thread that executes it.

But Why Threads Over Processes?

You might ask, “Kaiser, why use threads at all? Why not just use multiple processes since they’re more secure?”

Sure, you can spawn multiple processes using fork(), and that gives you fault isolation. But threads are useful—sometimes critical—because:

- They share memory, which makes communication fast and easy.

- They’re cheaper to create than processes.

- They’re lighter to schedule, which means less overhead for context switching.

- You can run them in parallel to solve parts of the same problem (if the runtime and hardware allow it).

A thread is often called a lightweight process because it shares the resources (memory, open files) of the process it belongs to instead of getting its own copies.


Threads vs Processes

| Feature | Thread | Process |
|---|---|---|
| Definition | Lightweight unit of execution inside a process | Heavyweight, standalone execution unit |
| Memory Space | Shared among threads of the same process | Separate, isolated |
| Communication | Fast (shared memory, heap, globals) | Slow (requires IPC: pipes, sockets, etc.) |
| Creation Time | Fast (pthread_create) | Slower (fork, exec) |
| Context Switching | Light, fewer resources to store/restore | Heavy, more resources and state involved |
| Crash Impact | Can crash the whole process | Typically isolated; a crash doesn’t affect others |
| Stack | Own stack; shared heap and globals | Completely separate stack, heap, data |
| Global Variables | Shared across threads | Not shared across processes |
| Use Case | Ideal for shared-memory concurrency | Ideal for fault isolation, sandboxing |
| OS-Scheduled? | Yes (kernel threads on Linux/macOS) | Yes |

The Ruby Process

Now that we understand processes and threads, let’s dive into how Ruby executes code on your CPU.

Ruby is an interpreted language. Unlike compiled languages like C or Go, Ruby code is not translated directly into machine code. Instead, Ruby code is parsed and converted into an intermediate representation that a virtual machine (VM) can execute.

What is the Ruby VM?

The Ruby VM (Virtual Machine), specifically YARV (Yet Another Ruby VM), is a program that acts like a virtual CPU with its own set of instructions and memory management. You can think of it as a software-based CPU and RAM that executes Ruby bytecode—an intermediate form of your Ruby code.

This bytecode is not low-level like assembly, but it’s also not plain Ruby code. It’s a simplified and optimized set of instructions tailored for the Ruby VM to process efficiently.

When you run a Ruby script, the following typically happens:

- Parsing – The Ruby interpreter reads your code and converts it into an Abstract Syntax Tree (AST).

- Compilation – The AST is compiled into bytecode.

- Execution – The Ruby VM executes the bytecode step by step, managing memory, threads, and method calls internally.

This entire flow happens at runtime, which is why Ruby is known for being flexible, but also slower compared to compiled languages.

How does the Ruby VM start?

When we run a Ruby program (say, ruby main.rb), several things happen before the VM executes our Ruby code:

- Startup: The OS creates a new native process and loads the Ruby binary from disk into it (from a shell, typically via fork() followed by exec()). This is a standard UNIX process: it gets its own memory space, file descriptors, and at least one thread of execution (the main thread).

- Ruby VM Warm-up: Once the OS process is running, Ruby sets up internal structures such as the memory manager (GC), global variables, and core classes, and initializes the Ruby VM (YARV). The VM is not a separate OS process; it is C code that is part of the Ruby interpreter, running inside the same OS process.

- Ruby Creates the Main Thread: Ruby starts by creating one Ruby-level thread, which maps to the process’s main native thread (typically a pthread on UNIX-like systems). Note: By default, each new Ruby thread you create maps to its own native thread. We will see this in the next article.

- Execution: The Ruby script is parsed into an AST, compiled into YARV bytecode, and then executed by the VM. All of this happens inside the same process, initially on the main native thread.

- Garbage Collection: Ruby has its own garbage collector, which also runs in the same process. The GC may use separate native threads, but again, they live in the same process.

Next, we will take a brief look at the fork() function, Ruby threads, Ractors, and Fibers. Stay tuned.